Jan-Erik Schmitt of Vision Components compares embedded vision today with the technology of 25 years ago, when the firm was founded

Vision Components started out 25 years ago as an embedded vision company. It’s just that 25 years ago the term ‘embedded vision’ didn’t exist.

The technology behind embedded vision has inevitably changed a lot in 25 years, but the principle – a programmable imaging device – remains the same. ‘We called our product a smart camera [when Vision Components started],’ explained Jan-Erik Schmitt, vice president of sales at the firm, ‘because the knowledge you needed 25 years ago to create an embedded vision solution was high, and there were only a few companies that were able to develop a product.’

Michael Engel founded Vision Components in 1996 on the back of the positive feedback he received when he took his first smart camera to the Vision show. Engel had already had success with a previous industrial imaging company, Engel und Stiefvater. He then set about building the smart camera that would launch Vision Components, the VC11. Installations at Engel und Stiefvater were complex and costly, using hardware as big as the tower PCs of the 1990s; the goal was to lower the cost of industrial imaging with a compact system in which image capture and processing could be controlled by software.

The VC11 used a single-core digital signal processor from Analog Devices, clocked at 32MHz; today, the company works with 1.2GHz quad-core Arm processors. Engel assembled the first 250 VC11 cameras himself to get a feel for the effort involved and the potential production costs.

‘It was nice at the beginning because there was no competition [for smart cameras],’ Schmitt said. ‘This changed over time.’

One of the first companies to do something similar, albeit in a different way, was DVT, later bought by Cognex. Vision Components’ VC11 was a freely programmable camera onto which customers could install their own algorithms. Most competing products instead included a software suite, which meant a higher initial price but lower development costs.

Schmitt said that for the first four or five years, Vision Components’ customers were OEMs and integrators, which did their own programming and could then sell the complete solution. But from the early 2000s, it no longer made sense for integrators to invest heavily in programming for low-volume projects. So Vision Components’ market switched almost completely to OEM customers: machine builders or system developers with certain competencies, companies that would sell machines for a number of years and so could invest in vision software development.

In the early 2000s, vision sensors came onto the market, making smart camera technology even easier to use. Vision Components’ own offering in this area was the VCM30, with a waterproof lens and lighting. But the company also collaborated with many firms wanting to develop vision sensors, including big players in the industrial sensor market. Many of that first generation of vision sensors were based on Vision Components hardware, Schmitt said.

From the mid-2000s, according to Schmitt, progress was a steady evolution in technology. That lasted until 2018, when a combination of factors, influenced by the consumer market, changed everything. A new form of embedded vision started to take hold, fuelled by developments in processors (GPUs, FPGAs and others), which also opened the door to neural networks and new AI-based applications. ‘This is the next step for machine vision and image processing in general,’ Schmitt said. He added that industrial vision has largely been separate from the consumer sector, but that there is now more overlap, with consumer electronics technology being used for semi-industrial applications.

Michael Engel founded the company 25 years ago. Credit: Vision Components

‘We are in the middle of this now,’ he said. ‘We’ve already seen a lot of consolidation in the machine vision industry, in part as the founding fathers reach retirement age.’

‘It’s a shake-up of industries and companies; an evolving market with a lot of consolidation around,’ he added.

Twenty-five years ago it took specialist knowledge to build the VC11; today, an embedded vision solution can be made by university students or hobbyists. All you need is a Mipi camera, a computer board such as a Raspberry Pi, and some of the ready-to-use software tools now available. ‘If you wanted to do this 25 years ago, it would have needed months of work,’ Schmitt said. ‘Plus, I don’t think it would have been possible because you’re using AI, and the computational power needed was not available 25 years ago in the embedded sector.’

That’s not to say developing a commercial embedded vision device is easy. Adapting and testing a system in the real world takes time and effort, and in an article for Imaging and Machine Vision Europe earlier in the year, Schmitt estimated that it could take between one and four years to build a commercial embedded vision product. Prototyping is reasonably straightforward, but validating the product and bringing it to the mass market can take time.

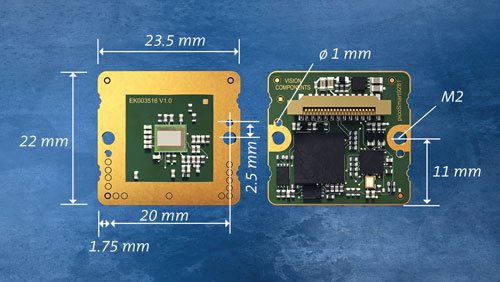

Vision Components now has a line of Mipi cameras and other embedded vision modules, including its VC PicoSmart embedded module, launched earlier this year. One of the smallest embedded vision systems available, VC PicoSmart is the size of an image sensor module, 22 x 23.5mm, and contains a one-megapixel global shutter CMOS sensor, an FPGA module, an FPU processor and memory.

Schmitt said the company’s Mipi modules are creating a completely new market segment, while it continues to provide smart cameras for the industrial sector. ‘At the moment these two segments are running in parallel,’ Schmitt said. ‘This will stay like this for a few more years because industrial will always need the long-term availability of components.’

Applications that Vision Components’ Mipi modules are opening up include drones, smart glasses with eye tracking and pathology testing, Schmitt noted. For example, Vision Components has been in touch with companies developing blood analysis devices at a much lower price than those currently on the market.

‘These are existing markets with a new approach,’ he said, ‘but also bringing higher volumes, which is interesting for companies. That’s something we’re going to see quite a lot in the coming years.’

Schmitt said that, even on the industrial side, more companies are now considering vision because prices are coming down. He gave the example of Vision Components’ laser profiler, which is not new technology but comes at a lower price point, and with lower performance, than high-end laser triangulation sensors.

VC PicoSmart measures just 22 x 23.5mm. Credit: Vision Components

‘One sector where we’re quite strong with this technology is in 3D angle measurements in metal sheet bending machines,’ Schmitt said. ‘It’s a niche market, but our performance is more than enough and it’s much cheaper than the high-end profilers. We’re finding new markets or existing markets that were not open for machine vision because of the price point a few years ago.’

Schmitt added that Vision Components has gone from zero competition when it first started to a point where now the firm’s biggest competitor is its potential customers. ‘Large companies have their own teams where they can develop their own cameras and hardware,’ he said. ‘This has changed completely; 20 years ago our customers were far away from designing their own components.’

He went on to say, however, that most customers for Vision Components’ embedded vision modules are focused on processing hardware and algorithms, and don’t want to touch camera development and image sensor integration. In addition, camera technology is evolving quickly, with new sensors released regularly. The camera module, in the form of a camera board, has become more of a commodity that is integrated into a system, Schmitt said.

He concluded: ‘Twenty-five years ago you could already do nice things with smart cameras, but you had to put a lot of effort into the programming. Today, in a lot of applications, there’s no need to optimise the code. If it doesn’t run fast enough, you just upgrade to the next CPU. It opens doors to many more applications because it’s so much easier.’