Chinese web services company Baidu has launched DuSee, a smartphone augmented reality (AR) platform that is the culmination of a 3D vision research project begun nearly two years ago.

DuSee is a collaboration between Baidu Research’s Institute of Deep Learning (IDL) and Baidu’s search products. The platform uses sophisticated computer vision and deep learning to understand and then augment a scene.

The work is the latest example of computer vision and deep learning techniques being applied to advance augmented reality on mobile devices, and the same techniques could filter down to industrial vision in the future. Jeff Bier, founder of the Embedded Vision Alliance, commented in an article for Imaging and Machine Vision Europe that deep learning could alter the way machine vision algorithms are developed.

DuSee will be integrated into Baidu’s flagship platform apps, such as the Mobile Baidu search app, which has hundreds of millions of monthly active mobile users.

‘DuSee is a natural extension of Baidu’s AI expertise,’ commented Andrew Ng, chief scientist of Baidu. ‘The path to better AR is through better AI.’

Yuanqing Lin, director of Baidu Research’s IDL, added: ‘Many smartphone AR apps today work by “pasting” a cartoon on top of the camera image, regardless of that image’s contents. The next generation of AR apps will use AI to understand the 3D environment, and create virtual objects that have rich interactions with the user and the real world.’

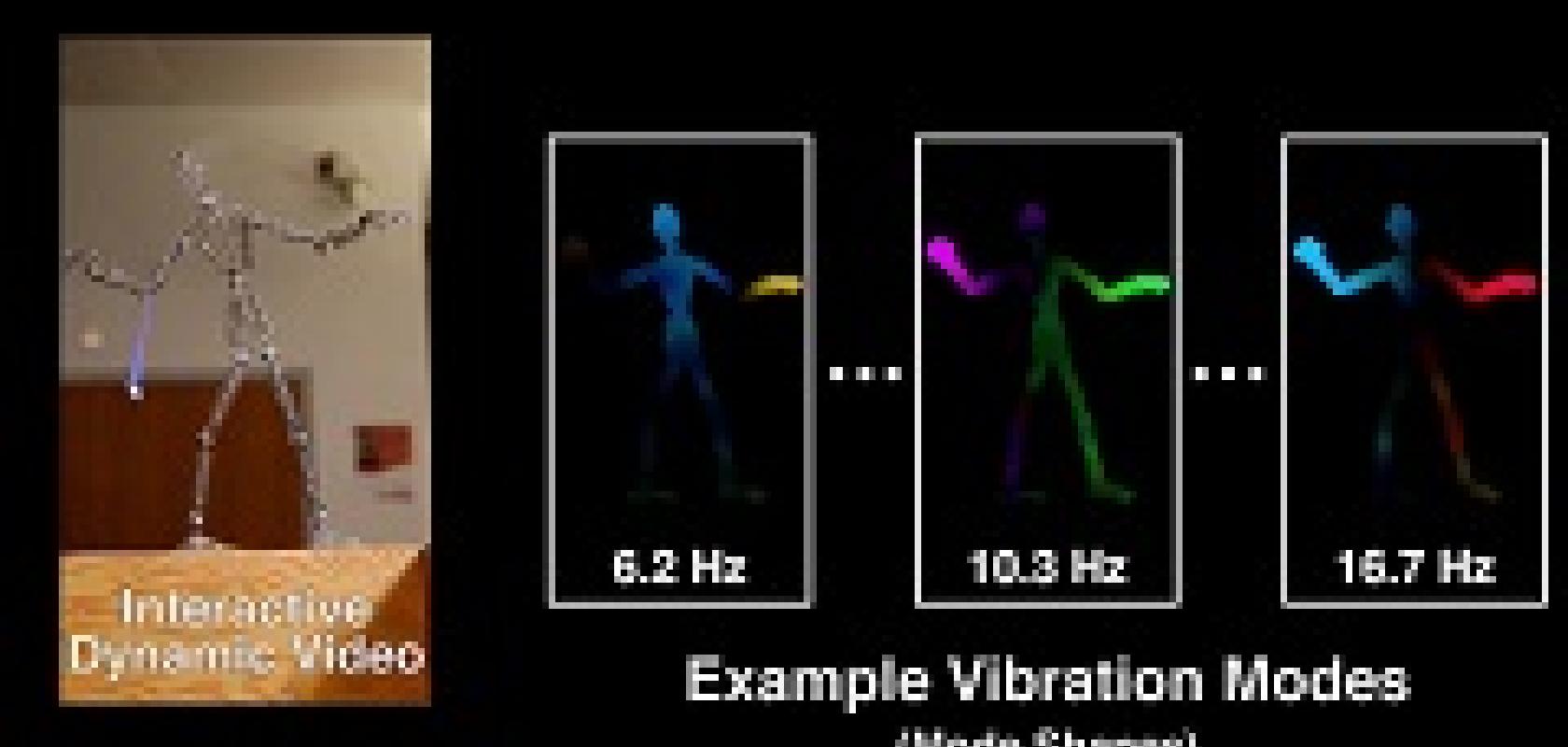

This ‘next generation’ may be closer than expected due to research being conducted at MIT. A team at the institute’s Computer Science and Artificial Intelligence Laboratory (CSAIL) has developed an imaging technique called Interactive Dynamic Video (IDV) that lets users reach in and ‘touch’ objects in videos.

Using traditional cameras and algorithms, IDV looks at the tiny, almost invisible vibrations of an object to create video simulations that users can interact with in a virtual environment.

‘This technique lets us capture the physical behaviour of objects, which gives us a way to play with them in virtual space,’ said CSAIL PhD student Abe Davis, who will be publishing the work this month for his final dissertation. ‘By making videos interactive, we can predict how objects will respond to unknown forces and explore new ways to engage with videos.’

Davis said that IDV has many possible uses, from filmmakers producing new kinds of visual effects to architects determining whether buildings are structurally sound. For example, he showed that, whereas the popular Pokémon Go app can drop virtual characters into real-world environments, IDV can go a step further by enabling virtual objects (including Pokémon) to interact with their environments in specific, realistic ways, such as bouncing off the leaves of a nearby bush. He outlined the technique in a paper published earlier this year with PhD student Justin Chen and Professor Fredo Durand.
