Fraunhofer IOSB's ABUL system provides a multi-screen interface that visualises the multiple inputs from UAV sensor systems

Designers of airborne surveillance systems, like those of many other security and military devices, have to deal with size, weight and power restrictions, but improvements in embedded computing performance are helping to overcome some of these difficulties. Unmanned aerial vehicles (UAVs) are typically equipped with an array of imaging and sensor technology, which outputs a continuous stream of data to ground-based operations teams tasked with managing and making sense of the information.

A series of projects undertaken by the Fraunhofer Institute of Optronics, System Technologies and Image Exploitation (IOSB) is working to optimise the generation, flow, visualisation and processing of data in airborne security applications. The group is creating resources for designers to produce their own low-power, high-performance embedded systems capable of real-time image processing.

Intelligent interfaces

To help UAV operators during critical missions, Fraunhofer IOSB has developed ABUL, a dual-screen interface capable of optimising and visualising data from multiple imaging systems, as well as performing a range of image processing tasks in real time and post-flight.

ABUL has been applied to the six-sensor system onboard LUNA, a UAV currently in use by the German army and Swiss air force that contains an array of infrared and other optical sensors. The first of the two screens displays the video and data streams of each operational sensor, in addition to controls for real-time image processing. The second screen displays processing results and controls for post-processing.

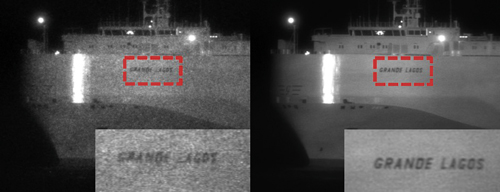

Fraunhofer IOSB's ABUL system can fuse several consecutive images in order to increase spatial resolution and reduce noise

The video data handling capabilities of ABUL include live image stabilisation, enhancement, optimisation, zoom and rotation. The system can also detect changes in real time, fuse several consecutive images together to increase spatial resolution and reduce noise, as well as generate multi-resolution images via multi-focal cameras. Stereo 3D images can also be interpreted. The modularity of ABUL makes it easy for Fraunhofer IOSB to integrate a whole range of algorithms for surveillance, such as those for identifying and tracking moving objects, or for detecting forest fires using infrared or hyperspectral imaging.
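The noise-reduction half of multi-frame fusion can be illustrated with a minimal sketch. It assumes the frames have already been registered (aligned) to one another, which for real UAV footage requires a separate motion-compensation step, and simply averages them pixel by pixel. With independent noise, averaging N frames cuts the noise standard deviation by roughly the square root of N. Increasing spatial resolution additionally needs sub-pixel registration and a reconstruction step, which this sketch does not attempt, and none of the function names below come from ABUL.

```python
import random
import statistics
from typing import List

Frame = List[List[float]]  # greyscale image stored as rows of pixel intensities


def fuse_frames(frames: List[Frame]) -> Frame:
    """Average already-registered frames pixel by pixel to suppress noise."""
    if not frames:
        raise ValueError("need at least one frame")
    n = len(frames)
    rows, cols = len(frames[0]), len(frames[0][0])
    return [
        [sum(frame[r][c] for frame in frames) / n for c in range(cols)]
        for r in range(rows)
    ]


def noisy_copy(scene: Frame, sigma: float, rng: random.Random) -> Frame:
    """Simulate one sensor frame: the true scene plus Gaussian noise."""
    return [[p + rng.gauss(0.0, sigma) for p in row] for row in scene]


def noise_std(img: Frame) -> float:
    """Spread of pixel values; for a flat test scene this is the noise level."""
    return statistics.pstdev([p for row in img for p in row])


if __name__ == "__main__":
    rng = random.Random(0)
    scene = [[100.0] * 64 for _ in range(64)]  # flat grey test scene
    frames = [noisy_copy(scene, sigma=10.0, rng=rng) for _ in range(16)]
    print(f"single frame noise:   {noise_std(frames[0]):.2f}")
    print(f"16-frame fused noise: {noise_std(fuse_frames(frames)):.2f}")  # roughly a quarter
```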

According to Norbert Heinze, machine vision group leader for Fraunhofer IOSB’s department of video evaluation systems, the main challenge when developing ABUL was handling the volumes of data generated by higher-resolution systems with complex processing algorithms. The institute is currently integrating an object detection and tracking algorithm into ABUL, designed for interpreting data produced by wide area motion imagery (WAMI) sensors.

ABUL is designed to work with systems conforming to STANAG 4609, the NATO Digital Motion Imagery standard used by European armed forces. This means any current or future UAV sensor system that also conforms to the standard could be visualised by Fraunhofer IOSB’s dual-screen interface.

Sensing change

Algorithms that highlight changes occurring in a scene are commonly used in airborne security applications. Previous versions of these algorithms have used differences in image intensity to detect changes, according to Dr Thomas Müller, of Fraunhofer IOSB’s department of video evaluation systems. More recent methods have used differences in colour to identify variations in a scene, although object shadows, which can vary significantly from one drone flight to the next, can confuse the algorithm.
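The intensity-difference approach, and the reason shadows trip it up, fits in a few lines. This minimal sketch assumes two co-registered greyscale images and an arbitrary, hand-picked threshold; the function name is illustrative only.

```python
from typing import List

Image = List[List[float]]
Mask = List[List[bool]]


def intensity_change_mask(before: Image, after: Image, threshold: float) -> Mask:
    """Flag pixels whose absolute intensity difference exceeds the threshold.

    Assumes both images are already co-registered, i.e. pixel (r, c) shows the
    same ground point in each; real UAV footage needs an alignment step first.
    """
    if len(before) != len(after) or any(
        len(rb) != len(ra) for rb, ra in zip(before, after)
    ):
        raise ValueError("images must have the same shape")
    return [
        [abs(pa - pb) > threshold for pb, pa in zip(row_before, row_after)]
        for row_before, row_after in zip(before, after)
    ]


if __name__ == "__main__":
    before = [[120, 120, 120],
              [120, 120, 120]]
    after = [[120, 60, 120],    # (0, 1): same ground, now darkened by a cast shadow
             [120, 120, 200]]   # (1, 2): a genuinely new bright object
    print(intensity_change_mask(before, after, threshold=30))
    # [[False, True, False], [False, False, True]]
    # Both pixels are flagged: intensity alone cannot tell a shadow from a real change.
```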

Wide area motion imagery (WAMI) sensors are being integrated into ABUL to image larger areas at lower frame rates. Credit: Fraunhofer IOSB

Müller has devised a new technique to optimise change detection, and will present a paper – Improved Video Change Detection for UAVs – at the upcoming SPIE Defence and Commercial Sensing conference in Orlando, Florida, taking place from 15 to 19 April.

‘In order to exclude shadow variations from the change detection result, the effect of shadows is learnt in the Lab colour space and then the dedicated shadow pattern is filtered out,’ Müller explained. ‘This pattern models the relationship between image intensity reduction and colour shift towards blue for cast shadows. Sunlight variations and different lighting conditions are compensated, to some degree, by performing a local intensity adaptation, which automatically equalises all larger areas in the image with different intensities.’
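A heavily simplified sketch of the shadow idea follows. It converts sRGB pixels to CIELAB using the standard D65 formulas, flags pixels whose colour difference (CIE76 delta E, the Euclidean distance in Lab) exceeds a threshold, and then discards changes that look like cast shadows: lightness L* drops to a fraction of its previous value while b* shifts towards blue (lower b*). Müller's method learns the shadow pattern from data; the fixed thresholds here are hand-picked placeholders, the local intensity adaptation step is not shown, and all function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]
Lab = Tuple[float, float, float]

# sRGB (D65) -> CIE XYZ matrix and D65 reference white
_M = ((0.4124, 0.3576, 0.1805),
      (0.2126, 0.7152, 0.0722),
      (0.0193, 0.1192, 0.9505))
_WHITE = (0.95047, 1.0, 1.08883)


def srgb_to_lab(rgb: RGB) -> Lab:
    """Convert an 8-bit sRGB triple to CIELAB (L*, a*, b*)."""
    def linearise(c: int) -> float:
        v = c / 255.0
        return v / 12.92 if v <= 0.04045 else ((v + 0.055) / 1.055) ** 2.4

    def f(t: float) -> float:
        delta = 6.0 / 29.0
        return t ** (1.0 / 3.0) if t > delta ** 3 else t / (3 * delta ** 2) + 4.0 / 29.0

    lin = [linearise(c) for c in rgb]
    # XYZ normalised by the reference white, one matrix row at a time
    x, y, z = (sum(m * c for m, c in zip(row, lin)) / w for row, w in zip(_M, _WHITE))
    fx, fy, fz = f(x), f(y), f(z)
    return 116.0 * fy - 16.0, 500.0 * (fx - fy), 200.0 * (fy - fz)


def looks_like_cast_shadow(before: Lab, after: Lab,
                           min_ratio: float = 0.2, max_ratio: float = 0.9,
                           min_blue_shift: float = 3.0) -> bool:
    """Placeholder rule: darker by a plausible factor AND shifted towards blue."""
    l_before, _, b_before = before
    l_after, _, b_after = after
    if l_before <= 0:
        return False
    ratio = l_after / l_before
    return min_ratio <= ratio <= max_ratio and (b_before - b_after) >= min_blue_shift


def detect_changes(before: Sequence[Sequence[RGB]], after: Sequence[Sequence[RGB]],
                   delta_e_threshold: float = 15.0) -> List[List[bool]]:
    """Change mask in Lab space with shadow-like changes filtered out."""
    mask = []
    for row_b, row_a in zip(before, after):
        out_row = []
        for pb, pa in zip(row_b, row_a):
            lab_b, lab_a = srgb_to_lab(pb), srgb_to_lab(pa)
            changed = math.dist(lab_b, lab_a) > delta_e_threshold  # CIE76 delta E
            out_row.append(changed and not looks_like_cast_shadow(lab_b, lab_a))
        mask.append(out_row)
    return mask


if __name__ == "__main__":
    road = (128, 128, 128)
    before = [[road, road, road]]
    after = [[road, (60, 66, 85), (200, 40, 40)]]  # unchanged, shadowed road, red vehicle
    print(detect_changes(before, after))  # [[False, False, True]]
```

The darker, bluer pixel is recognised as a shadow and dropped, while the red vehicle (darker but shifted towards yellow, not blue) is kept as a genuine change.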

According to Müller, his improvements enable essentially fully automatic change detection in airborne video footage, which will aid security applications such as large-area surveillance, surveillance of buildings and power plants, and the protection of soldiers against improvised explosive devices.

Embedded efficiency

Real-time image processing algorithms, such as change detection, are now being implemented on embedded systems within UAVs to ensure that only relevant data, rather than an entire high-resolution image stream, is recorded and transmitted. This does, however, place greater processing and power demands on UAV processors, which poses a challenge for system designers.
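One simple way to act on a change mask on board is to skip frames with too few changed pixels and otherwise send only the bounding box around the changes. The sketch below illustrates that idea; the function names and the 0.1 per cent default threshold are hypothetical, not taken from any Fraunhofer system.

```python
from typing import List, Optional, Tuple

Mask = List[List[bool]]
Box = Tuple[int, int, int, int]  # (top, left, bottom, right), inclusive


def region_to_transmit(mask: Mask, min_changed_fraction: float = 0.001) -> Optional[Box]:
    """Return the bounding box of changed pixels, or None to skip the frame."""
    total = sum(len(row) for row in mask)
    changed = [(r, c) for r, row in enumerate(mask) for c, hit in enumerate(row) if hit]
    if total == 0 or len(changed) / total < min_changed_fraction:
        return None  # nothing relevant: do not record or transmit this frame
    rows = [r for r, _ in changed]
    cols = [c for _, c in changed]
    return min(rows), min(cols), max(rows), max(cols)


def box_area(box: Box) -> int:
    """Number of pixels inside an inclusive bounding box."""
    top, left, bottom, right = box
    return (bottom - top + 1) * (right - left + 1)


if __name__ == "__main__":
    mask = [[False] * 100 for _ in range(100)]
    for r in range(10, 15):
        for c in range(20, 30):
            mask[r][c] = True  # a 5 x 10 pixel moving object
    box = region_to_transmit(mask)
    print(box, box_area(box), "of", 100 * 100, "pixels")  # (10, 20, 14, 29) 50 of 10000 pixels
```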

While low-power image processing solutions consuming less than a watt are available, most of them lack sufficient performance, according to Michael Grinberg, also of Fraunhofer IOSB’s department of video evaluation systems. At the other end of the spectrum, high-performance image processing systems that consume hundreds of watts are available. Grinberg noted that there is a shortage of processors offering a good trade-off between decent performance and low power consumption, in the range of 1W to 15W, especially at a reasonable price point for small-series production.

To this end, a consortium of seven partners, including Fraunhofer IOSB, has established a project called Towards Ubiquitous Low-power Image Processing Platforms (TULIPP) under the EU’s Horizon 2020 programme. TULIPP aims to provide designers of vision-based systems with a reference platform consisting of hardware, an operating system and a programming toolchain for building embedded systems.

‘TULIPP develops a reference platform that defines implementation rules and interfaces to tackle power consumption issues, while delivering efficient computing performance for image processing applications,’ Grinberg explained. ‘Using this reference platform will enable designers to develop an image processing solution at a reduced cost to meet typical embedded system requirements: size, weight and power (SWaP).’

The TULIPP reference platform is intended to serve both as a proof of concept and as a baseline that developers can reuse or modify to fit their needs. TULIPP solutions will enable developers to implement real-time image processing algorithms and adapt them to low-power platforms. By using existing industry standards, the reference platform also shows developers how best to select suitable standards and combine them to build an energy-efficient image processing system.

Additionally, through a set of guidelines, the TULIPP reference architecture will help developers select the right combination of computation and storage resources and organise them on their boards to achieve the best resource utilisation while minimising energy consumption. The architecture also includes resource management capabilities that will switch selected computing resources between a state of efficient activity and a state of inactivity in which they consume no power, according to Grinberg.
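The switching behaviour described above resembles a classic idle-timeout power-gating policy. The following sketch models that policy under deliberately simplified assumptions: each hypothetical resource is either on (drawing a fixed, made-up power) or off (drawing nothing), it is switched on instantly when work arrives, and it is switched off after a fixed idle timeout. It is not TULIPP's resource manager, whose design the article does not describe.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Set


@dataclass
class ComputeResource:
    """Hypothetical compute unit that is either active or power-gated (off)."""
    name: str
    active_power_w: float   # assumed draw while switched on, busy or idle
    idle_timeout_s: float   # switch off after this long without work
    is_on: bool = False
    idle_for_s: float = 0.0
    energy_j: float = 0.0


class ResourceManager:
    """Idle-timeout power gating: wake on demand, switch off when idle."""

    def __init__(self, resources: Iterable[ComputeResource]) -> None:
        self.resources: Dict[str, ComputeResource] = {r.name: r for r in resources}

    def tick(self, dt_s: float, busy: Set[str]) -> None:
        """Advance time by dt_s; resources named in `busy` have work this step."""
        for name, res in self.resources.items():
            if name in busy:
                res.is_on = True        # wake-up latency and energy are ignored here
                res.idle_for_s = 0.0
            elif res.is_on:
                res.idle_for_s += dt_s
                if res.idle_for_s >= res.idle_timeout_s:
                    res.is_on = False   # gated off: assumed to consume nothing
            if res.is_on:
                res.energy_j += res.active_power_w * dt_s


if __name__ == "__main__":
    fpga = ComputeResource("fpga", active_power_w=5.0, idle_timeout_s=0.015)
    gpu = ComputeResource("gpu", active_power_w=10.0, idle_timeout_s=0.015)
    manager = ResourceManager([fpga, gpu])
    # 10 ms steps: the FPGA has work for three steps, the GPU never does
    for step in range(8):
        manager.tick(0.01, busy={"fpga"} if step < 3 else set())
    print(fpga.is_on, round(fpga.energy_j, 3), gpu.energy_j)  # False 0.2 0.0
```

A shorter timeout saves more idle energy but, on real hardware, pays wake-up latency and energy more often; this sketch ignores that cost entirely.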

The TULIPP project is set to conclude in February 2019 after three years, when the resulting reference platform will be bundled with other project outcomes into a starter kit that will serve as a basis for developing image processing applications. The kit will be based on Xilinx’s Zynq UltraScale+ MPSoC and contain a power-aware real-time operating system, toolchain support, sample applications and a TULIPP reference platform handbook with guidelines for optimum computing resource selection.

To showcase the platform’s capabilities, the TULIPP consortium has selected three use cases, from the automotive, medical and UAV domains, that have challenging requirements and pose interesting design trade-offs typical of low-power, high-performance, real-time image processing. For the UAV domain, Fraunhofer IOSB will estimate depth images from a stereo camera setup and use them to avoid obstacles in the flight path.

‘We focus on simple, user-friendly, yet sophisticated and adjustable depth-map generation, running in real time on embedded hardware with low power consumption. Such measurements have a vast range of applications across multiple domains,’ said Grinberg.
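Depth from a rectified stereo pair rests on one relation: a point at distance Z appears shifted by a disparity d between the left and right images, with Z = f·B/d, where f is the focal length in pixels and B is the baseline between the two cameras. The minimal sketch below finds d by sum-of-absolute-differences (SAD) block matching along a single scanline and converts it to depth. It is a textbook baseline, not Fraunhofer IOSB's algorithm, and the focal length, baseline and window sizes in the example are invented.

```python
from typing import List, Optional


def disparity_row(left: List[float], right: List[float],
                  max_disp: int, half_win: int) -> List[Optional[int]]:
    """SAD block matching along one rectified scanline.

    For each pixel x in the left row, choose the shift d in 0..max_disp that
    minimises the sum of absolute differences between the window around x in
    the left row and the window around x - d in the right row.
    """
    width = len(left)
    result: List[Optional[int]] = []
    for x in range(width):
        best_d: Optional[int] = None
        best_cost = float("inf")
        for d in range(max_disp + 1):
            if x + half_win >= width or x - d - half_win < 0:
                break  # window falls outside the row; larger d cannot fit either
            cost = sum(abs(left[x + k] - right[x - d + k])
                       for k in range(-half_win, half_win + 1))
            if cost < best_cost:
                best_d, best_cost = d, cost
        result.append(best_d)  # None where no window fits (image borders)
    return result


def depth_m(disparity: Optional[int], focal_px: float, baseline_m: float) -> Optional[float]:
    """Z = f * B / d; zero or missing disparity gives no finite depth."""
    if not disparity:
        return None
    return focal_px * baseline_m / disparity


if __name__ == "__main__":
    # Synthetic textured scanline; the right view sees it shifted 3 pixels left
    left = [float((i * 37) % 101) for i in range(30)]
    right = [left[i + 3] for i in range(27)] + [-1.0, -1.0, -1.0]
    disp = disparity_row(left, right, max_disp=5, half_win=2)
    print(disp[5:28])                                          # all 3
    print(depth_m(disp[10], focal_px=700.0, baseline_m=0.12))  # about 28 m
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant objects than for near ones, which is one reason real systems add sub-pixel refinement and smoothing on top of plain block matching.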

Ultimately, according to Grinberg, the TULIPP reference platform will simplify and accelerate the development of specialised solutions for security applications. ‘It will be easier to build energy-efficient image processing platforms and systems, which will make our life safer,’ he concluded.

Smaller sensors, sharper sight

Infrared firm Xenics is looking to improve airborne security applications through the development of smaller, higher-resolution shortwave infrared (SWIR) image sensors, which will aid in applications where hidden threats are obscured by smoke, fog or camouflage among vegetation.

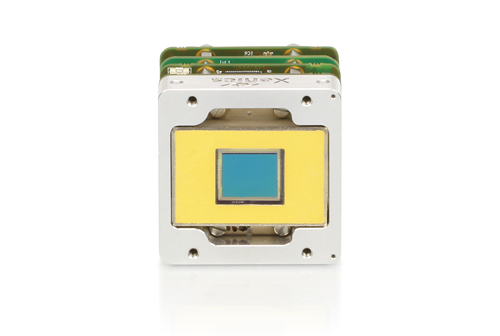

‘For the shortwave infrared, where we make our own detectors, we are working towards small sensor and pixel sizes in order to reduce the size of the sensor area, which will reduce the size of the optics needed and allow our cameras to be made smaller,’ explained Raf Vandersmissen, product manager at Xenics. ‘Airborne security applications will certainly benefit from a lot smaller sensor sizes. High resolution shortwave infrared imaging is an important market, especially for surveillance.’
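The link between pixel size and optics size follows from basic geometry: a sensor with N pixels of pitch p across is N·p wide, and a lens that gives that sensor a horizontal field of view θ needs a focal length f = N·p / (2·tan(θ/2)). For the same pixel count and field of view, shrinking the pitch therefore shrinks the focal length, and with it the lens, in proportion. The pitches and field of view in this sketch are illustrative assumptions, not Xenics specifications.

```python
import math


def sensor_width_mm(pixels_across: int, pitch_um: float) -> float:
    """Active sensor width: pixel count times pixel pitch."""
    return pixels_across * pitch_um / 1000.0


def focal_length_mm(pixels_across: int, pitch_um: float, hfov_deg: float) -> float:
    """Focal length giving the requested horizontal field of view (pinhole model)."""
    half_width = sensor_width_mm(pixels_across, pitch_um) / 2.0
    return half_width / math.tan(math.radians(hfov_deg) / 2.0)


if __name__ == "__main__":
    for pitch in (15.0, 10.0):  # hypothetical pixel pitches in micrometres
        print(f"640 px at {pitch:.0f} um: sensor {sensor_width_mm(640, pitch):.1f} mm wide, "
              f"f = {focal_length_mm(640, pitch, hfov_deg=10.0):.1f} mm for a 10 degree FOV")
```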

SWIR cameras can see through haze or fog. Credit: Xenics

According to Vandersmissen, the firm sees the next logical step in SWIR sensor development as higher resolutions of 1 and 1.3 megapixels, a considerable increase from the 320 x 256 and 640 x 512 pixel resolutions it currently offers.

‘A challenge in using infrared for security applications is that these applications often require a large field of view and therefore a high resolution,’ commented Vandersmissen. ‘While this isn’t a problem in the visible wavelengths, infrared has always been a low-resolution technology. The higher-resolution shortwave infrared sensors that will come on the market will therefore allow shortwave infrared detectors to enter more security applications.’
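The resolution argument can be made concrete with a little arithmetic. The sketch compares the pixel counts of the formats mentioned above and the approximate ground footprint of one pixel for a fixed field of view at a given range. It assumes a 1280 x 1024 layout for 1.3 megapixels (a common format, but not one the article specifies), uses a small-angle approximation, and picks an arbitrary 30 degree field of view and 2km range.

```python
import math

FORMATS = {  # (columns, rows)
    "320 x 256": (320, 256),
    "640 x 512": (640, 512),
    "1280 x 1024 (assumed 1.3 MP layout)": (1280, 1024),
}


def pixel_footprint_m(hfov_deg: float, pixels_across: int, range_m: float) -> float:
    """Approximate width on the ground covered by one pixel (small-angle)."""
    ifov_rad = math.radians(hfov_deg) / pixels_across  # instantaneous FOV per pixel
    return range_m * ifov_rad


if __name__ == "__main__":
    for label, (cols, rows) in FORMATS.items():
        footprint = pixel_footprint_m(30.0, cols, 2000.0)
        print(f"{label}: {cols * rows:,} pixels, ~{footprint:.2f} m per pixel at 2 km")
```

Going from 640 x 512 to 1280 x 1024 quadruples the pixel count (327,680 to 1,310,720) and halves each pixel's footprint for the same field of view, which is why a wide field of view demands high resolution.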

Xenics infrared camera cores are compact and consume little power

Xenics anticipates these detectors will be used in security applications in which bad weather, such as haze or fog, obscures the target. According to Vandersmissen, visible-light systems cannot be used in those circumstances, and longwave infrared (LWIR) wavelengths offer sub-optimal imaging quality, especially over longer ranges.

The firm also supplies thermal imaging camera cores in the LWIR for security uses such as search and rescue, border surveillance, forest fire detection and reconnaissance.