At Intertraffic China 2015, which took place 31 March to 2 April in Shanghai, companies demonstrated that monitoring the road is much more than just measuring speed. Modern imaging solutions stretch far beyond traditional traffic applications; developments in resolution, speed and processing power have allowed vision manufacturers to design smarter, smaller, and cheaper systems with significantly increased capabilities.

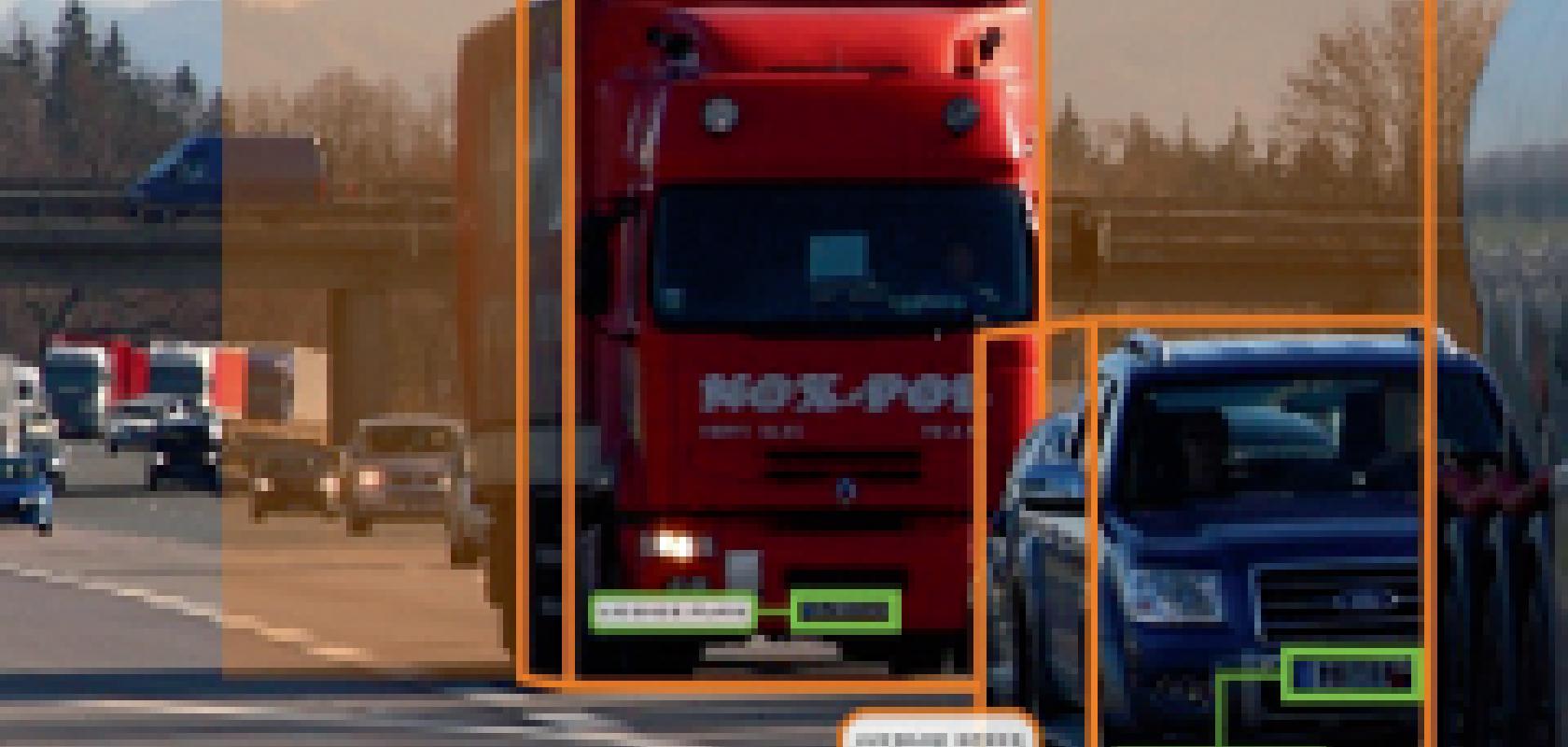

Current intelligent transport systems (ITS) can monitor multiple high-speed events at once, serving as an all-in-one solution for traffic authorities. Launched at the Vision show last year, the T-Exspeed intelligent transport system from Italian company Kria can measure a range of different factors across six lanes simultaneously. ‘Any vehicle trajectory or every visible feature on the vehicles can be automatically recognised and stored,’ said Stefano Arrighetti, CEO of Kria. ‘For example, the device already detects wrong-way driving, lane changes, tailgating, forbidden turns and so on. It recognises licence plates, dangerous goods placards and vehicle colours, and classifies vehicles on the basis of their 3D size.’

Because the system was designed as an open architecture, new functions can be added after installation. ‘Very often we develop “on demand” customised functions that we could not even foresee when the device was sold and installed on the road,’ explained Arrighetti. He added that since its launch the software has been upgraded with functions such as detecting vehicles that fail to stop at intersections and a link to the national blacklist database, among others.
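Kria does not describe how its open architecture is implemented. The following is a purely hypothetical sketch of the general idea: detection functions are kept in a registry, so new ones can be added after installation without changing the core tracking code. The `VehicleTrack` fields, the detector names, the speed limit and the plate value are all invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class VehicleTrack:
    """Hypothetical per-vehicle record produced by the core tracker."""
    plate: str
    lane: int
    speed_kmh: float
    stopped_at_line: bool


# A detector inspects one track and returns an event description, or None.
Detector = Callable[[VehicleTrack], Optional[str]]


class DetectorRegistry:
    """Holds pluggable detection functions, run in registration order."""

    def __init__(self) -> None:
        self._detectors: Dict[str, Detector] = {}

    def register(self, name: str, detector: Detector) -> None:
        # New functions can be registered at any time; the core is untouched.
        self._detectors[name] = detector

    def run(self, track: VehicleTrack) -> List[str]:
        events = []
        for name, detect in self._detectors.items():
            result = detect(track)
            if result is not None:
                events.append(f"{name}: {result}")
        return events


registry = DetectorRegistry()
registry.register("speeding",
                  lambda t: "over limit" if t.speed_kmh > 130 else None)

# Functions added later, after the device is already on the road:
registry.register("fail_to_stop",
                  lambda t: None if t.stopped_at_line else "did not stop")
blacklist = {"AB123CD"}  # stand-in for a lookup against an external database
registry.register("blacklist",
                  lambda t: "listed plate" if t.plate in blacklist else None)

print(registry.run(VehicleTrack("AB123CD", 2, 142.0, stopped_at_line=True)))
```

Keeping each function independent means a customer-specific rule can be shipped as a software update rather than as new hardware.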

To monitor six lanes simultaneously, the challenge is keeping up with the huge amount of uncompressed data from the stereo cameras, Arrighetti remarked. ‘We have to quickly locate vehicles inside the camera field of view. After doing so, an important challenge is to track multiple moving targets in parallel. Sometimes there are more than 10 vehicles within the six lanes at the same time,’ he said.
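Arrighetti does not say which tracking method T-Exspeed uses. One simple way to keep several targets apart is to associate each new detection with the nearest existing track, closest pairs first. The sketch below is a generic greedy nearest-neighbour association, not Kria's algorithm. The coordinates and the `max_dist` gate are illustrative assumptions, and production trackers typically add motion prediction (for example a Kalman filter) and optimal assignment.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (x, y) position on the road plane, in metres


def associate(tracks: Dict[int, Point], detections: List[Point],
              max_dist: float) -> Tuple[Dict[int, Point], List[Point]]:
    """Greedily match detections to tracks, closest pairs first.

    Returns the updated positions of matched tracks and the unmatched
    detections (candidates for new tracks). Tracks with no detection
    within max_dist are simply absent from the result.
    """
    # Every track/detection pair that passes the distance gate.
    pairs = []
    for track_id, track_pos in tracks.items():
        for det_index, det_pos in enumerate(detections):
            d = math.dist(track_pos, det_pos)
            if d <= max_dist:
                pairs.append((d, track_id, det_index))
    pairs.sort()  # shortest distances first

    used_tracks, used_dets = set(), set()
    updated: Dict[int, Point] = {}
    for _, track_id, det_index in pairs:
        if track_id in used_tracks or det_index in used_dets:
            continue  # each track and each detection is used at most once
        updated[track_id] = detections[det_index]
        used_tracks.add(track_id)
        used_dets.add(det_index)

    unmatched = [p for i, p in enumerate(detections) if i not in used_dets]
    return updated, unmatched


tracks = {1: (0.0, 10.0), 2: (3.5, 12.0)}
detections = [(3.6, 13.0), (0.1, 11.5), (7.0, 5.0)]
print(associate(tracks, detections, max_dist=2.0))
```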

At a higher processing level, another important aspect is measuring speed with the highest possible accuracy. ‘To do this, sub-pixel algorithms are necessary, because even a fraction of a pixel makes the difference. In terms of computational effort, this is like using sensors with a higher resolution than their nominal one,’ Kria added.
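Kria does not disclose its sub-pixel algorithms. A widely used textbook technique is parabolic interpolation: fit a parabola through the best-scoring integer position of a matching-score profile and its two neighbours, then take the vertex as the refined location. The sketch below shows only that generic technique, applied to a made-up score profile.

```python
from typing import Sequence


def subpixel_peak(scores: Sequence[float]) -> float:
    """Locate the peak of a 1-D score profile with sub-pixel precision.

    Fits a parabola through the best integer sample and its two
    neighbours and returns the position of the parabola's vertex.
    """
    i = max(range(len(scores)), key=lambda k: scores[k])
    if i == 0 or i == len(scores) - 1:
        return float(i)  # peak on the border: no neighbours to fit
    left, centre, right = scores[i - 1], scores[i], scores[i + 1]
    denom = left - 2.0 * centre + right
    if denom == 0.0:
        return float(i)  # flat top: no curvature to refine with
    return i + 0.5 * (left - right) / denom


# Scores sampled at integer pixels from a peak that truly sits at 2.3 px.
profile = [-(x - 2.3) ** 2 for x in range(5)]
print(subpixel_peak(profile))
```

Because the sampled profile here is exactly quadratic, the fit recovers the true peak at 2.3 pixels even though no sample lies there. Real matching-score profiles are only approximately parabolic, so the refinement is an estimate.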

The capability to monitor six lanes at a time took years of development, according to Kria. The system introduced in 2014 uses Allied Vision’s Prosilica GT4907 and GT6600 cameras, which have full-frame sensors with resolutions of 16 and 29 megapixels respectively.

Kria’s first multi-lane system, designed in 2008, could observe two lanes at a time and contained a 1.4-megapixel Prosilica GC1360 camera. ‘It was a stereo device with a 100cm baseline, able to monitor two parallel lanes with one per cent speed error. The long baseline was needed to achieve the desired accuracy,’ Arrighetti said.

To extend coverage to three parallel lanes, the company released another version of the system in 2010, which featured a 5-megapixel camera and a stereo baseline reduced to 66cm.

For the third and current version of the system, launched at the Vision show, Kria’s engineers managed to cut the stereo baseline to 29cm while keeping the speed error at one per cent.

Improving the instrument’s capability while maintaining the same level of accuracy proved a huge challenge, especially as the distance from the cameras to the vehicles in the field of view increases in proportion to the number of lanes. ‘To keep the same three-dimensional measurement accuracy we would also have to increase the stereo baseline proportionally. But Kria’s R&D challenge was that we could neither increase nor keep the same baseline,’ commented Arrighetti.
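The standard pinhole model for a rectified stereo pair makes this baseline trade-off explicit. It is offered here as textbook background, not as Kria's own error model. With focal length $f$ in pixels, baseline $B$ and disparity $d$ in pixels, the range to a point is

$$Z = \frac{fB}{d}$$

Differentiating with respect to $d$ gives the range error produced by a disparity error $\delta d$:

$$\delta Z = \frac{Z^2}{fB}\,\delta d, \qquad \frac{\delta Z}{Z} = \frac{Z\,\delta d}{fB}$$

For fixed $f$ and $\delta d$, keeping the relative error $\delta Z/Z$ constant as $Z$ grows therefore requires $B$ to grow in proportion to $Z$, which matches Arrighetti's remark. A smaller $B$ must instead be compensated by a larger $f$ (more pixels across the same field of view) or a smaller $\delta d$ (finer sub-pixel matching). Treating the one per cent speed error as following the relative range error is a simplifying assumption.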

Another difficulty was scaling down the technology to make the device mobile without compromising performance. ‘To make the device actually portable and to install it on a car, we succeeded in cutting it by more than 50 per cent,’ Arrighetti said. ‘This is the final challenge: to realise a system that, without relaxing accuracy requirements, fits into a car without any problem.’

Improvements in sensor resolution in recent years played an important role in increasing the number of lanes from two to six. ‘To cover six lanes we need more pixels in order to ensure the same accuracy. To achieve the T-Exspeed v.3.0 result of six-lane monitoring we must use very high-resolution, fast-readout sensors,’ he said. ‘This time we have designed scalable mechanical and software architectures that are also forward compatible with future sensors.’
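Under the textbook stereo model, the relative range error scales as Z·δd/(f·B), where Z is the working distance, δd the disparity error, f the focal length in pixels and B the baseline. That relation gives a rough estimate of why more pixels are needed. The sketch assumes, following the article, that working distance scales with the number of lanes. It also treats the disparity-error ratio as a free parameter. For a fixed angular field of view, f in pixels grows with sensor width in pixels. These are simplifications for illustration, not Kria's design figures.

```python
def required_focal_scale(range_ratio: float, baseline_ratio: float,
                         disparity_err_ratio: float = 1.0) -> float:
    """Factor by which f (in pixels) must grow to keep Z * dd / (f * B) fixed.

    range_ratio:         new working distance / old working distance
    baseline_ratio:      new baseline / old baseline
    disparity_err_ratio: new sub-pixel disparity error / old one
    """
    return range_ratio * disparity_err_ratio / baseline_ratio


# From two lanes at a 100cm baseline to six lanes at 29cm, assuming the
# working distance triples and the disparity error is unchanged.
same_matching = required_focal_scale(range_ratio=6 / 2, baseline_ratio=29 / 100)

# The same change if sub-pixel matching also halves the disparity error.
finer_matching = required_focal_scale(6 / 2, 29 / 100, disparity_err_ratio=0.5)

print(f"{same_matching:.1f}x vs {finer_matching:.1f}x more focal length in pixels")
```

Under these assumptions, the focal length in pixels would need to grow roughly tenfold with unchanged matching, and about fivefold if the disparity error were halved. This is one way to see why high-resolution sensors and sub-pixel processing work together.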

Targeted solutions

For applications where it is not necessary to observe every type of incident, targeted systems are being developed to serve one specific function, rather than several, to provide a more cost-effective option.

This was the case for Eutecus, a video analytics solution provider, which developed a low-cost instrument for detecting red-light violations. For this application, the company partnered with Teledyne Dalsa, which provided a Camera Link version of its Genie TS cameras for use with Eutecus’ Bi-i Smart Cube platform, a multi-core video analytics engine.

The analytics engine that operates the system was embedded with the camera so that the entire solution could be installed above ground, providing authorities with a simplified solution that is less costly than traditional traffic monitoring applications.

Although the camera system gathers the same type of images as more conventional traffic monitoring applications, the data is parsed so that only red-light violations are captured. In other words, the Genie TS camera acquires frames continuously, but images in which no violation occurs are discarded. In this application, the camera is the primary video analytics source, monitoring both the traffic light and the traffic itself.

Typically, the Genie camera acquires images of both the traffic light and the vehicle in relation to the intersection stop line. If the images of the traffic light and the vehicle/stop line don’t align as they should, indicating a violation, then the picture is sent to an operator.
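Eutecus does not publish its decision logic. The following hypothetical sketch implements the rule described above. Each frame carries the signal state read from the traffic-light region and each tracked vehicle's position relative to the stop line. A violation is a vehicle crossing the line while the light is red, and frames without one produce no output. The `Frame` structure, the signal labels and the sign convention for positions are assumptions, and the sketch leaves out radar fusion.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple


@dataclass
class Frame:
    timestamp: float
    light: str                   # "red", "amber" or "green"
    positions: Dict[int, float]  # track id -> metres past the stop line
                                 # (negative = still before the line)


def red_light_violations(frames: Iterable[Frame]) -> List[Tuple[float, int]]:
    """Return (timestamp, track id) for every vehicle that crosses on red.

    Frames that produce no violation contribute nothing to the output,
    and several offenders in the same frame are reported independently.
    """
    last_position: Dict[int, float] = {}
    violations = []
    for frame in frames:
        for track_id, pos in frame.positions.items():
            before = last_position.get(track_id)
            crossed = before is not None and before < 0.0 <= pos
            if crossed and frame.light == "red":
                violations.append((frame.timestamp, track_id))
            last_position[track_id] = pos
    return violations


frames = [
    Frame(0.0, "red", {1: -3.0, 2: -1.0}),
    Frame(0.1, "red", {1: -1.5, 2: 0.4}),   # vehicle 2 crosses on red
    Frame(0.2, "red", {1: 0.2, 2: 1.8}),    # vehicle 1 crosses on red
    Frame(5.0, "green", {3: -0.5}),
    Frame(5.1, "green", {3: 0.5}),          # vehicle 3 crosses on green
]
print(red_light_violations(frames))
```

In a real system, only the flagged frames would be forwarded to an operator, together with the supporting evidence.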

The scene analysis is combined with radar data, and a second, low-resolution video camera provides documentation to verify cases in which a driver disputes a ticket. The system can also capture multiple events simultaneously, for example when more than one car runs a red light at the same time.

Designing a system targeted at one application is also allowing manufacturers to develop smaller, stand-alone automatic number plate recognition (ANPR) systems.

Last year, Vision Components introduced its Carrida ANPR software engine, which it has since combined with a camera with on-board processing. The result is an embedded ANPR system that is much smaller than conventional traffic solutions.

‘The key point about the Carrida Cam embedded system is that no other hardware is necessary – not even a PC. All processing is done directly and immediately on the camera’s CPU board,’ said Jan-Erik Schmitt, vice-president of sales at Vision Components.

The system can recognise number plates in several lanes simultaneously, and it can also be used in other ANPR applications, such as access control.

The camera used in the system is from the company’s Arm-based VC Z series, which was introduced at the Vision show last year. The series is available with five different global shutter CMOS sensors, with resolutions of up to 4.2 megapixels.

The camera’s electronic shutter mechanism is important for image quality when capturing fast-moving objects, such as cars on a motorway. In a rolling shutter sensor, the imager is exposed one row at a time, starting at the top and scrolling down over the sensor. If a rolling shutter camera captures a fast-moving vehicle, the exposure starts when the car is in one position, and by the time it reaches the bottom row the vehicle will have moved. This can cause unwanted effects in the final image, such as a skewed or distorted vehicle.

With a global shutter, as used in the VC Z cameras, the entire imager is reset before integration to remove any residual signal in the sensor wells. All of the pixels are then exposed at the same time, giving a better-quality image of moving objects.
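The rolling shutter effect can be sized with a back-of-the-envelope calculation. The bottom of the vehicle is captured later than the top by the number of rows it spans multiplied by the sensor's line time, and the vehicle keeps moving during that interval. The sketch below assumes motion parallel to the image rows. Its numbers (120 km/h, 600 rows, 10 µs line time, 200 pixels per metre) are illustrative assumptions, not VC Z specifications. A global shutter removes this skew but not motion blur, which depends on the exposure time.

```python
def shutter_skew_px(speed_kmh: float, rows_spanned: int, line_time_us: float,
                    px_per_m: float, global_shutter: bool = False) -> float:
    """Horizontal offset, in pixels, between the top and bottom of a moving object.

    With a rolling shutter, each row starts exposing one line time after the
    row above it. With a global shutter, every row starts at the same moment.
    """
    if global_shutter:
        return 0.0
    speed_m_per_s = speed_kmh / 3.6
    delay_s = rows_spanned * line_time_us * 1e-6  # top-to-bottom start delay
    return speed_m_per_s * delay_s * px_per_m


rolling = shutter_skew_px(120, 600, 10.0, 200)
global_ = shutter_skew_px(120, 600, 10.0, 200, global_shutter=True)
print(f"rolling: {rolling:.0f} px, global: {global_:.0f} px")
```

With these assumed values, the vehicle moves about 0.2m while the readout sweeps down its image. That offset is roughly 40 pixels of skew, enough to distort a number plate.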

The road ahead

Over the next five years, intelligent transport systems will advance to incorporate a wider range of functions spanning different market sectors, according to Kria’s Arrighetti: ‘In my vision, enforcement, security, toll payment and statistics applications shall merge into a single multi-purpose device. The end user will no longer need to install many single-function devices on the same road: a device conceived only for violation detection, a traffic webcam, an ANPR camera, a vehicle counter sensor, and so on,’ he stated. ‘There will be one device and several control rooms, each one monitoring and managing different traffic information collected by the same multi-purpose device.’

Even the way data is handled at the receiving end will evolve to become more efficient. Central stations need not be a physical room, because clients could have the software installed on devices such as tablets or smartphones, Arrighetti added. ‘Doing so means that drivers will also be able to access some of the information provided by this open architecture,’ he said. ‘Technically this is already possible, but ITS regulations and standards must also evolve to keep pace with these new technologies.

‘Looking at the specific field of machine vision, it is already evident that, within the so-called “internet of things”, many of the next “things” will be ITS-related: cars, traffic signs and on-board mobile units will all be internet-connected, interoperable units,’ Arrighetti continued. ‘We must not defend our own proprietary solutions, but fearlessly open up to integration with other emerging technologies.’

Jessica Rowbury is a technical writer for Electro Optics, Imaging & Machine Vision Europe, and Laser Systems Europe.

You can contact her on jess.rowbury@europascience.com or on +44 (0) 1223 275 476.

Find us on Twitter at @ElectroOptics, @IMVEurope, @LaserSystemsMag and @JessRowbury.